The Merge and crypto's electricity consumption

How much energy use did Ethereum's transition to proof-of-stake really eliminate?

TL;DR

The Merge likely decreased global electricity consumption by 0.03-0.06% rather than the 0.2% estimates circulated by members of the Ethereum Foundation.

The Cambridge Bitcoin Electricity Consumption Index must be adjusted for rising global energy prices and is currently overestimating the stable electricity consumption of the network by about 15%.

This analysis has been open-sourced and is available here.

GPUs that migrated from Ethereum to other GPU-mined chains have decimated the profitability of mining those cryptoassets and will likely keep mining only in the short term.

Estimated reading time: about 30 minutes.

Writing Outline:

Models of Bitcoin’s Energy Consumption

Cambridge Bitcoin Electricity Consumption Index (CBECI)

Rising electricity prices

Model adjustments for rising electricity prices

A Simplified Bitcoin Hash Rate Model

Increasing ASIC efficiency

Directional estimates of energy consumption

Timeseries of profitability of various mining machines

Models of Ethereum’s (Pre-Merge) Energy Consumption

A Simplified Ethereum Hash Rate Model

Directional estimates of energy consumption

Kyle McDonald’s Bottom-Up Estimate

Increasing ASIC efficiency

Best guess and bounds

Timeseries Data of Ethereum Hash Rate (all chains)

Post-Merge Migration of GPUs to Other Blockchains

Profitability of GPUs Mining Ethereum Classic

The Jump in Bitcoin Hash Rate After The Merge

Introduction

Ethereum completed its much-anticipated transition from proof-of-work to proof-of-stake, colloquially known as The Merge, in September 2022. The difference in the relative energy intensity of the two consensus mechanisms has been heavily covered by the mainstream media, but the data surrounding cryptoasset energy consumption is fraught with misinformation, leading to claims that are often unfounded.

The inspiration for this piece is a quote from Justin Drake suggesting that Ethereum’s transition away from proof-of-work eliminated 1/500th of global electricity consumption. Unfortunately the data doesn’t support the claim.

Models of Bitcoin’s Energy Consumption

Any conversation about cryptoasset energy use should start with Bitcoin. Not only is Bitcoin the most valuable cryptoasset, it also has the biggest and most studied energy footprint.

Cambridge Bitcoin Electricity Consumption Index (CBECI)

The Cambridge Bitcoin Electricity Consumption Index is widely regarded as the best tool for measuring Bitcoin's energy use, but it is not without flaws. On a very positive note, the assumptions used to construct the index are stated clearly and their data export tool allows for a fairly wide range of modification.

The two main limitations with the model are:

The model assumes an equal basket of mining devices for all profitable machines.

It would be an extremely challenging task to model the actual breakdown of mining devices, but with Bitmain and their line of Antminer devices being the industry standard, it would be appropriate to weight their contribution more heavily than that of smaller players.

The model assumes the average global price of electricity to be constant at $0.05/kWh.

This simplification of the model has historically been valid, but with global electricity prices soaring in 2022 the default assumption of $0.05/kWh creates a false appearance of profitability for less efficient machines—this in turn overestimates the electricity consumption of the network.

If we examine the CBECI data for various electricity cost assumptions, the divergence is very clear and it becomes evident that the output of the model is highly dependent on global energy prices.

Characterizing global electricity prices is a full project on its own. As an extension, I would urge anyone to look at the CBECI data adjusted for global prices and to create a version of the model with weights calculated from Cambridge’s Mining Map, which estimates the distribution of miners by country. If you want to work on this and have questions, please feel free to reach out.

The US Energy Information Administration publishes monthly electricity prices for all states, and if we chart the data the reason for this writing becomes apparent. The impact of the global energy shortage on the United States has been relatively tame compared to many parts of the world, yet industrial electricity prices have surged from their long-term average of below $0.07/kWh to nearly $0.10/kWh. Even states with abundant and historically cheap energy have felt the squeeze.

By combining Cambridge’s electricity consumption estimates with electricity data from the US Energy Information Administration, we can generate a potentially more accurate picture of current Bitcoin mining electricity consumption [1]. In the figure below the CBECI model is adjusted both for the average US industrial price and for cheap-electricity states, which for the purposes of this analysis are modeled by simply discounting the industrial average cost by 27%.

The 27% reduction may seem arbitrary, but if we compare the model outputs to the historical estimates of the index, we see close alignment in recent periods before 2022.

The CBECI estimate for November 9, 2022 was 103 TWh per year, but with the modification for cheap energy states, the current annualized consumption should be about 87 TWh per year [2].
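The adjustment described above can be sketched as follows. This is only an illustration under stated assumptions: the mapping `cbeci_by_price` stands in for a CBECI export at full-cent electricity costs, its values are made-up placeholders rather than real index data, and the function name and the sample US industrial price are hypothetical. The 27% discount is the one used in this analysis.

```python
from bisect import bisect_left

CHEAP_STATE_DISCOUNT = 0.27  # discount applied to the US industrial average


def interpolate_consumption(cbeci_by_price, price):
    """Linearly interpolate annualized consumption (TWh) between price points."""
    prices = sorted(cbeci_by_price)
    if not prices[0] <= price <= prices[-1]:
        raise ValueError("price is outside the range of available estimates")
    upper = bisect_left(prices, price)
    if prices[upper] == price:
        return cbeci_by_price[prices[upper]]
    p0, p1 = prices[upper - 1], prices[upper]
    y0, y1 = cbeci_by_price[p0], cbeci_by_price[p1]
    return y0 + (price - p0) / (p1 - p0) * (y1 - y0)


# Placeholder values only (USD/kWh -> TWh per year), NOT real CBECI data.
cbeci_by_price = {0.05: 100.0, 0.06: 90.0, 0.07: 80.0}

us_industrial_price = 0.09  # hypothetical monthly average, USD/kWh
cheap_state_price = us_industrial_price * (1 - CHEAP_STATE_DISCOUNT)
print(round(interpolate_consumption(cbeci_by_price, cheap_state_price), 1))
```

Repeating the lookup for each month of price data would give an adjusted consumption series of the kind described here.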

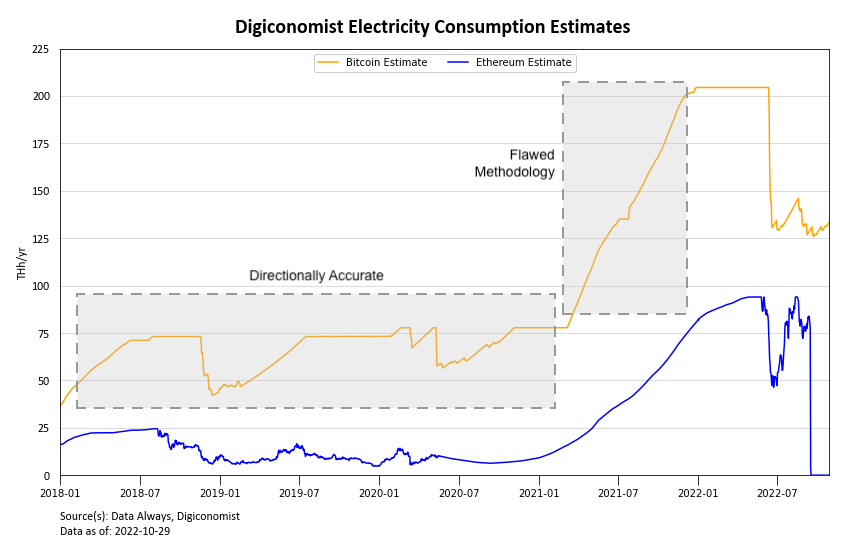

The Digiconomist Bitcoin Energy Consumption Index

The Bitcoin Energy Consumption Index, created by Alex De Vries, also known as Digiconomist, is often cited by the mainstream media but is widely disliked in the Bitcoin community.

I take a less aggressive stance against the model than many others, and even believe it to have been a conceptually promising design, but the lack of real-world inputs handicaps the model’s ability to respond to industry-shaping events. Although the model has traditionally been an overestimate, it was mostly directionally accurate for a long period of time until the Chinese mining ban in 2021.

As I understand it, Alex De Vries’s model is designed as follows [3]:

The model looks at dollar-denominated mining revenue and then, justified by a depreciation schedule, assumes that in equilibrium miners will be willing to spend 60% of their revenue on electricity. It converts that 60% share of revenue into an equivalent energy spend, assuming a cost of $0.05/kWh, and then slowly creates virtual mining machines inside the model until a long-term equilibrium is reached between electricity spend and the share of mining revenue.
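The core conversion in that description can be sketched in a few lines. This is a simplified illustration of the equilibrium end state only, based on my summary above rather than on De Vries's actual code: it skips the gradual creation of virtual machines, and the function name and revenue figure are hypothetical.

```python
KWH_PER_TWH = 1e9


def equilibrium_consumption_twh(annual_revenue_usd,
                                electricity_share=0.60,
                                price_usd_per_kwh=0.05):
    """Annualized electricity use implied by a fixed share of miner revenue."""
    electricity_spend_usd = annual_revenue_usd * electricity_share
    return electricity_spend_usd / price_usd_per_kwh / KWH_PER_TWH


# Hypothetical input: 10 billion USD of annual mining revenue.
print(equilibrium_consumption_twh(10e9))
# The same revenue at a higher assumed electricity price implies less energy.
print(equilibrium_consumption_twh(10e9, price_usd_per_kwh=0.10))
```

Because the implied consumption scales inversely with the assumed price, the fixed $0.05/kWh input matters a great deal once real prices move.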

One can argue about the duration of the depreciation schedule (Antminer S9s were visibly operational for far longer than De Vries’s assumed lifetime), and the model needs to be updated for the current environment of rising global energy prices, but the equilibrium concept is intriguing and reflective of how actors might operate in the real world.

Another big issue is that Bitcoin mining is a space rife with inefficiencies, including:

Non-economic actors like the Russian government who may be willing to mine at a loss to gain access to otherwise sanctioned digital currency.

Global restrictions like the Chinese mining ban in 2021 that will push the model quickly out of equilibrium and potentially invert its behavior relative to reality.

General reluctance of many entities to enter the bitcoin mining space, where their participation would push the outcome closer to an efficient equilibrium.

As of November 9, 2022, the Digiconomist electricity consumption model estimated the annualized power usage of the Bitcoin Network to be 118 TWh. This estimate is 14% higher than the default Cambridge model and roughly 36% higher than the Cambridge model after adjusting for rising energy prices in cheap US states (118 TWh versus 87 TWh). If we were to look at data from a few months back, the deviations would be much larger.

Although intellectually intriguing, the data demonstrates that the model has historically been inaccurate and shouldn’t be cited by credible institutions [4].

A Simplified Bitcoin Hash Rate Model

One of the reasons it is so hard to model the Bitcoin Network’s energy consumption is the drastic improvement in the efficiency of mining equipment in recent years. For example, the difference in efficiency between the Antminer S9 and the Antminer S19, which were released about three years apart, is about 2.7x.

Instead of creating a time-dependent function to describe the distribution of hash rate powering the network, we can speak in eras and make broad statements about which generation of mining machines is overwhelmingly in use [5]. The three Bitmain Antminer devices in the figure below represent high-efficiency machines for their respective eras, but when describing S9 or S17 dominance there certainly were non-Antminer devices of that same era of efficiency representing significant hash rate.

The figure below models the power consumption of the network if we assume that all mining devices are of a single model. By comparing the profiles with the CBECI model and the operating profit profiles below, we can gain additional insight into the transition between eras in Bitcoin mining history.
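The single-model assumption reduces to one multiplication: network hash rate times the energy a device spends per unit of hashing. A minimal sketch follows, with loud caveats: the efficiency figures are approximate spec-sheet style values that I am assuming for illustration, and the hash rate is a placeholder, so neither should be read as the exact inputs behind the figure.

```python
HOURS_PER_YEAR = 8760


def annualized_twh(hash_rate_ehs, efficiency_j_per_th):
    """Annualized consumption if the whole network ran one device model."""
    th_per_s = hash_rate_ehs * 1e6            # 1 EH/s = 1e6 TH/s
    watts = th_per_s * efficiency_j_per_th    # (TH/s) * (J/TH) = J/s = W
    return watts * HOURS_PER_YEAR / 1e12      # Wh per year -> TWh per year


# Assumed, approximate efficiencies in J/TH (illustrative only).
assumed_efficiency = {"Antminer S9": 98.0, "Antminer S17": 45.0, "Antminer S19": 34.0}
placeholder_hash_rate_ehs = 250.0  # hypothetical network hash rate

for model, j_per_th in assumed_efficiency.items():
    print(model, round(annualized_twh(placeholder_hash_rate_ehs, j_per_th), 1))
```

Feeding in a historical hash rate series instead of a single value would trace out one consumption profile per device model.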

If we plot the operating profit margins for those same Bitmain bitcoin miners, assuming US industrial electricity rates, we see that the alleged changes in miner market share flow very logically with rising and collapsing margins. We’re now in an era where only the latest generation of machines, powered by electricity contracted at the lowest possible rates, allows for profitable bitcoin mining. These collapsed margins are why we’re starting to see more bitcoin miners default, including massive players like Core Scientific.
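For readers who want to reproduce a margin series like this, here is a rough sketch of the per-machine calculation. It assumes operating profit means revenue minus electricity cost only, and every input in the usage lines (machine specs, revenue per TH/s per day, electricity price) is a hypothetical placeholder rather than data from this analysis.

```python
def operating_margin(hash_rate_ths, power_w, revenue_usd_per_th_day, price_usd_per_kwh):
    """Daily operating margin: (revenue - electricity cost) / revenue."""
    revenue = hash_rate_ths * revenue_usd_per_th_day
    electricity_cost = power_w / 1000 * 24 * price_usd_per_kwh  # kW * h * USD/kWh
    return (revenue - electricity_cost) / revenue


# Hypothetical machine: 100 TH/s drawing 3,000 W, earning 0.10 USD per TH/s per day.
for price in (0.05, 0.07, 0.10):
    margin = operating_margin(100, 3000, 0.10, price)
    print(f"{price:.2f} USD/kWh -> margin {margin:.0%}")
```

A negative result means the machine spends more on electricity than it earns, which is the point at which an economic actor would switch it off.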

In the modern era, all economic actors must run Antminer S17s or newer, which means that we can draw logical bounds on total power consumption via those two devices [6].

Maximum (all S17s): 112 TWh

Minimum (all S19s): 85 TWh

The minimum value in this model lines up well with Cambridge’s value if we adjust it for rising energy costs, demonstrating that the long-term stable dynamic at this point is only machines of the S19 era and newer.

Models of Ethereum’s (Pre-Merge) Energy Consumption

Although Ethereum’s energy consumption was heavily scrutinized before it transitioned to proof-of-stake, the topic was far less studied than Bitcoin’s energy consumption. One of the main reasons for the lack of academic study is the variety of mining options for Ethereum: the mining market never became dominated by ASICs because the transition away from proof-of-work was thought to be a year or two away for five years, meaning the research, design, and manufacturing of specialized machines wasn’t economical.

Comically, Bitmain released their first truly performant Ethereum ASIC in July 2022, only two months before The Merge [7].

The Digiconomist Ethereum Energy Consumption Index

“The Ethereum Energy Consumption Index was designed with the same purpose, methods and assumptions as the Bitcoin Energy Consumption Index.”

The Digiconomist Ethereum Energy Consumption Index is the most often cited model for estimating Ethereum’s power requirements (including by The Ethereum Foundation), but the model is a cut-and-paste version of Alex De Vries’s Bitcoin model—which we showed above is unable to react to real-world events and became an extreme overestimate starting in mid-2021. As such, the model’s stated increase in electricity consumption in 2021 is unreliable and should be investigated.

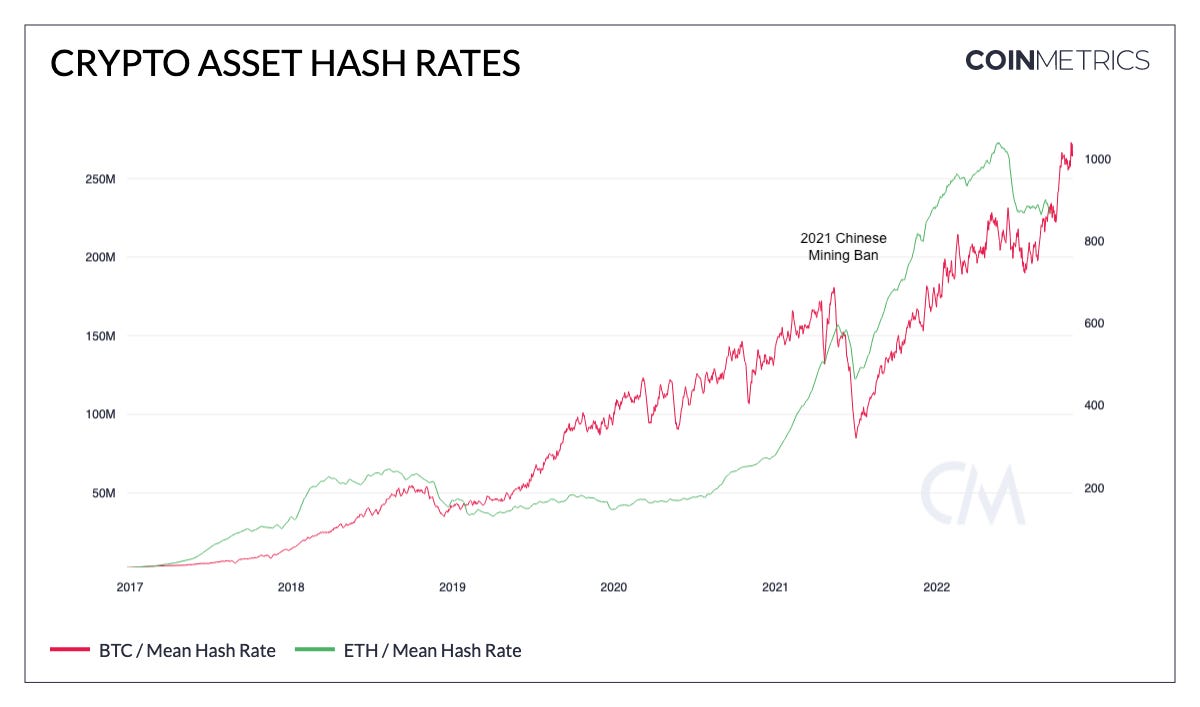

One strength of the Digiconomist Ethereum Index is that, if we compare hash rate changes and recovery during the Chinese mining ban, the drop-off in Ethereum hash rate was far smaller and recovered much more quickly than Bitcoin’s. This makes sense because Ethereum mining was comparatively less industrialized and more profitable, so mining operations that had to shut down would have been incentivized to prioritize the resumption of their Ethereum mining operations.

A Simplified Ethereum Hash Rate Model

Because the Ethereum mining landscape was less optimized than the Bitcoin mining industry, the improvements in solving Ethash were slower and less pronounced than the improvements for Bitcoin ASICs.

Using data from WhatToMine, we can see that AMD GPUs improved in Ethash efficiency by about 160% from mid-2016 to late 2020 [8]. For comparison, Bitmain ASICs improved by about 400% during the same time period.

With data from WhatToMine we can create bounds and show that even if the Ethereum network were powered solely by six-year-old GPUs that are considered inefficient, the electricity consumption at the time of The Merge would still not have been above 40 TWh per year, about half the power consumption estimated by Digiconomist.

Ethereum’s extreme hash rate growth between late 2020 and mid-2022 coincided with a bull market that saw extreme profitability for miners, but it also coincided with the launch of a new generation of GPUs from both AMD and Nvidia. It is likely that the majority of the hash rate that came online came from these more efficient machines. The main question, in my eyes, becomes how much of the old-GPU hash rate was replaced and how much stayed online, since it largely remained profitable to mine Ethereum with these older cards.

If we assume that all the old-generation cards remained online, but that new hash rate growth was mostly new-generation cards with an average efficiency similar to the Nvidia RTX 3090 seen in the figure, the network power consumption would be in the 25-30 TWh per year range, about one-third of the value estimated by Digiconomist.
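A mixed-fleet estimate of this kind can be sketched by summing the power draw of each group of cards. The RX 480 efficiency of 214 MH/kJ comes from the WhatToMine figures in the footnotes; the split of hash rate between old and new cards and the new-generation efficiency below are assumptions chosen purely for illustration.

```python
HOURS_PER_YEAR = 8760


def fleet_consumption_twh(segments):
    """Annualized TWh for (hash rate in TH/s, efficiency in MH/kJ) pairs."""
    total_kw = 0.0
    for hash_rate_ths, efficiency_mh_per_kj in segments:
        mh_per_s = hash_rate_ths * 1e6               # 1 TH/s = 1e6 MH/s
        total_kw += mh_per_s / efficiency_mh_per_kj  # kJ/s is the same as kW
    return total_kw * HOURS_PER_YEAR / 1e9           # kWh per year -> TWh per year


# Hypothetical split of network hash rate at the time of The Merge.
old_cards = (300, 214)  # TH/s, MH/kJ (RX 480 efficiency per WhatToMine)
new_cards = (600, 400)  # TH/s, MH/kJ (assumed new-generation efficiency)
print(round(fleet_consumption_twh([old_cards, new_cards]), 1))
```

Passing a single segment with the full network hash rate at the old-card efficiency gives the all-old-GPU upper bound instead.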

Kyle McDonald’s Bottom-Up Estimate

The most comprehensive study that I’ve found on Ethereum’s energy consumption (and carbon footprint) was done by Kyle McDonald.

McDonald examines the evolving Ethash efficiency of GPUs and then examines mining pool data to deconstruct the mining inventory for individual pool members [9]. He then calculates the efficiency of the mining pool, which should skew retail-heavy and less efficient than the industry average, to be 380 MH/kJ in late 2021.

After confirming this number with other measurements and accounting for losses in the mining process, McDonald uses a best guess and bounds approach to estimate the total power consumption of the network.

Through these calculations, his model suggests that the all-time high for annualized power consumption was 40 TWh per year. His best guess for power consumption at the time of The Merge is 21.4 TWh per year, with upper and lower bounds of 15.5 to 30.1 TWh per year.

The Ethereum Merge

Ending the era of proof-of-work on Ethereum relieved the network and its token holders of responsibility for future energy consumption and emissions, but years of highly profitable mining on the chain allowed for the rollout of an international coalition of GPUs that didn’t all shut off overnight. If they had, perhaps this would be an article about the surge in electronic waste.

The easiest group to compare are miners who shifted from the dominant Ethereum chain to mining Ethereum Classic and Ethereum PoW. These two networks initially saw an influx of about 30% of the main Ethereum chain’s hash power, but over the past two months the number has fallen to about 21%.

Mining that shifted to other Ethereum chains is only part of the story. It is a more complicated task to measure hash rate that shifted to other proof-of-work chains. One method to roughly estimate how many miners made the switch is to assume all mining on the main Ethereum chain was performed with a single type of GPU and then look at the comparative hash rates across chains. Pairing the relative hash powers with the jumps in hash rate on other chains after the Ethereum Merge, we can paint a broad picture of what equivalent share of mining devices is now working on other hashing algorithms.
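The method in the paragraph above can be sketched as follows. The idea is to convert each chain's post-Merge jump in hash rate into a count of reference GPUs, then divide by the number of reference GPUs implied by Ethereum's pre-Merge hash rate. All chain names, hash rates, and per-GPU rates in the usage lines are hypothetical placeholders; real inputs would come from chain data and from benchmark figures for the chosen card.

```python
def equivalent_gpu_share(eth_hash_rate, gpu_rate_on_ethash,
                         hash_rate_jumps, gpu_rate_by_chain):
    """Share of Ethereum's GPU-equivalents now hashing on other chains.

    Each chain's hash rate jump and the GPU's rate on that chain must use
    the same unit; units may differ between chains.
    """
    eth_gpus = eth_hash_rate / gpu_rate_on_ethash
    migrated_gpus = sum(
        jump / gpu_rate_by_chain[chain] for chain, jump in hash_rate_jumps.items()
    )
    return migrated_gpus / eth_gpus


# Hypothetical inputs for one reference GPU across two made-up chains.
share = equivalent_gpu_share(
    eth_hash_rate=900e6,        # MH/s before The Merge (placeholder)
    gpu_rate_on_ethash=120.0,   # MH/s per reference GPU (placeholder)
    hash_rate_jumps={"chain_a": 150e6, "chain_b": 40e6},
    gpu_rate_by_chain={"chain_a": 120.0, "chain_b": 60.0},
)
print(f"{share:.0%}")
```

Because every chain is expressed in units of the same reference card, the result is only a broad picture, as noted above, and not a count of physical devices.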

I chose the Nvidia RTX 3090 to perform these calculations and generated the profiles below.

What we see is that nearly 50% of equivalent GPUs appear to have initially switched over to mining other cryptoassets, but with poor margins this number has now dropped to about 30%. These numbers include the Ethereum Classic and Ethereum PoW direct analysis performed in the section above.

Focusing on Ethereum Classic, which saw the largest influx of hash rate, mining immediately went from extremely profitable to extremely oversaturated. Even at attractive industrial electricity rates, mining Ethereum Classic is now a fruitless endeavor. Other GPU-minable proof-of-work chains are similar, so it seems likely that this 30% influx of mining machines will continue to fall until mining becomes profitable again.

It is my educated guess that, in the medium-term, altcoins could absorb about 10% of Ethereum's hash rate while remaining profitable, so there remains a lot of capitulation to be seen from former Ethereum miners.

Another factor that has not been widely discussed, although it has been mentioned on various podcasts by Nic Carter, is that Ethereum mining had been significantly more profitable than Bitcoin mining for quite some time. This naturally led many Bitcoin mining operations to begin to dedicate part of their industrial space and power to mining Ethereum. Once mining Ethereum was no longer an option, many of these facilities repurposed the space and electricity they were using to mine Ethereum and replaced their GPUs with ASICs to mine Bitcoin.

The swapping of this mining machinery led to a surge in Bitcoin hash rate directly after The Merge, which quickly plateaued once the swap was complete.

It’s impossible to attribute the entire increase in Bitcoin hash rate to The Ethereum Merge, but it likely played a not-insignificant role. The data is noisy, but the increase in Bitcoin hash rate was on the scale of 10%, likely representing 7 to 9 TWh per year in electricity. I’m really guessing at this point, but to minimize errors I would attribute half of the increase to the repurposing of industrial rack space: about 4 TWh.

Results

Ethereum’s annualized electricity consumption in the lead up to The Merge was in the range of 20 to 25 TWh per year.

Approximately 30% of Ethereum’s GPU hash power has been redirected towards other proof-of-work chains.

Approximately 2 to 6 TWh per year of electricity was potentially repurposed towards mining Bitcoin.

Therefore,

The Merge reduced global electricity consumption by 8 to 15 TWh per year.

With global electricity consumption estimated at 24,000 TWh per year, this suggests that The Merge resulted in a decrease in demand of 0.03 to 0.06%.
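One way to reproduce this range is sketched below. It assumes the three results combine as Ethereum's pre-Merge consumption, minus the share still running on other proof-of-work chains (taken to draw power in proportion to its share of hash power), minus the electricity repurposed towards Bitcoin. The function names are hypothetical.

```python
GLOBAL_CONSUMPTION_TWH = 24_000


def merge_reduction_twh(eth_twh, redirected_share, bitcoin_twh):
    """Net annual reduction after redirected GPUs and repurposed rack space."""
    return eth_twh * (1 - redirected_share) - bitcoin_twh


def share_of_global_percent(reduction_twh):
    return reduction_twh / GLOBAL_CONSUMPTION_TWH * 100


# Pair the low Ethereum estimate with the high Bitcoin offset, and vice versa.
low = merge_reduction_twh(eth_twh=20, redirected_share=0.30, bitcoin_twh=6)
high = merge_reduction_twh(eth_twh=25, redirected_share=0.30, bitcoin_twh=2)

print(round(low, 1), round(high, 1))
print(round(share_of_global_percent(low), 3), round(share_of_global_percent(high), 3))
```

Under these assumptions the upper end comes out slightly above 15 TWh per year, which is consistent with the rounded range stated above.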

Although the decrease in global electricity consumption is less than many people suggested, The Merge was still crucial in preventing further growth in electricity consumption. Had the transition away from proof-of-work not been a pending event diminishing the long-term demand for Ethereum mining, Ethereum’s power consumption and hash rate would have been much higher than their final values due to better profitability compared to Bitcoin mining.

[1] Estimates from the Cambridge Centre for Alternative Finance are only available in full cent increments, so electricity consumption between data points was linearly interpolated.

[2] The unadjusted US industrial average electricity price implies electricity consumption by the network of 69.5 TWh per year as of November 3, 2022.

[3] Alex De Vries described my original description as:

“Looks mostly correct except one should distinguish between a short and long-term market equilibrium (as machine costs should be considered sunk cost in the short run), noting that in the short run electricity costs can easily exceed 60% (especially if the market is getting squeezed)”

I responded by adding the words “long-term” in the last sentence of my description.

[4] De Vries's other models on electronic waste and energy use per transaction are also rather egregious deceptions:

For his electronic waste model, his assumed depreciation schedule is far shorter than network data suggests.

For his energy use per transaction model, he neglects to mention that transactions are capable of containing many operations or transfers, and he ignores that the energy use of the cryptoasset is currently disconnected from the number of transactions: bitcoin mining is currently heavily subsidized (the block subsidy is 95%+ of mining revenue), so if all transactions were to cease immediately the economic incentives for miners would be relatively unchanged. As such, any measurements of energy use per transaction are not representative of the economic reality.

Who is responsible for Bitcoin’s emissions is more of a philosophical question than practical one right now, but the onus likely falls on all holders rather than on only transactors.

[5] The Bitmain Antminer S9 actually had an interesting design flaw which left an identifiable trace in block-level data. This quirk was studied by Coin Metrics to demonstrate how much it dominated network share in 2018.

[6] There are various non-economically driven actors participating in the network, but it is very difficult to measure their fractional contribution to the mining industry. My estimate would be about 5%. These actors are still incentivized to use newer machines, but may only have older machines available which they may be willing to run at a loss.

[7] Bitmain released their first Ethereum ASIC, the E3, in July 2018 with an efficiency of 250 MH/kJ, comparable to many GPUs of the same era. The E9, released in July 2022, has an efficiency of 1250 MH/kJ, about 2.5x the efficiency of the latest generation of GPUs. The E9 would have become dominant in the space had Ethereum not transitioned away from proof-of-work.

[8] As per WhatToMine, the improvement in Ethash mining for AMD GPUs was as follows:

(Jun 2016) AMD RX 480: 30 MH/s @ 140 W = 214 MH/kJ

(Nov 2020) AMD RX 6800 XT: 62 MH/s @ 110 W = 563 MH/kJ

(563 MH/kJ) ÷ (214 MH/kJ) = 2.63, i.e. an efficiency improvement of 163%